We usually hide habits that are destructive or shameful.

Rarely do we hide things that make us better at our jobs.

Yet a strange dynamic has settled over the creative economy. We have discovered tools that dramatically increase output and quality, but using them is increasingly treated like a character flaw.

Efficiency has become a dirty secret.

The Shadow Workflow

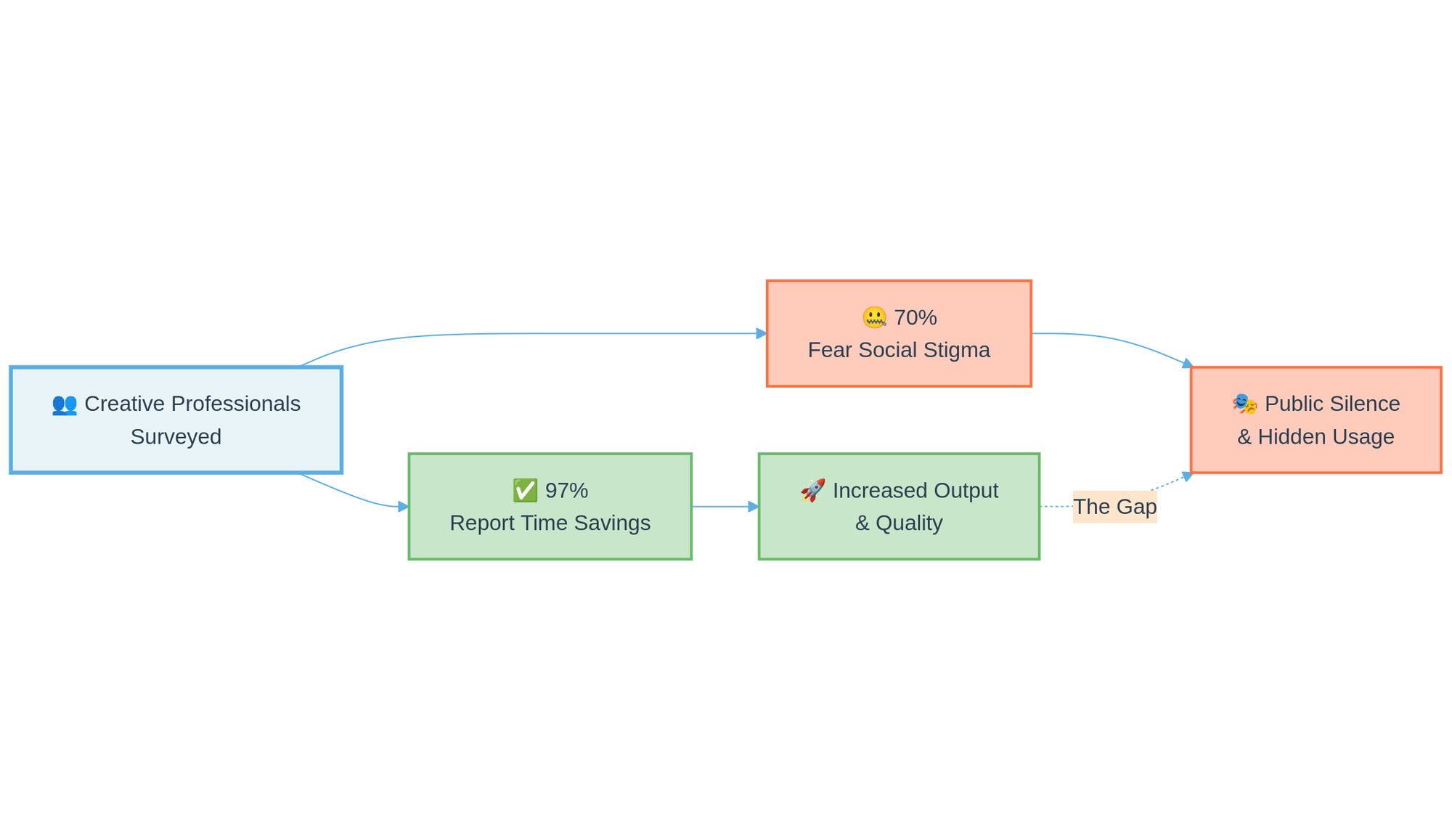

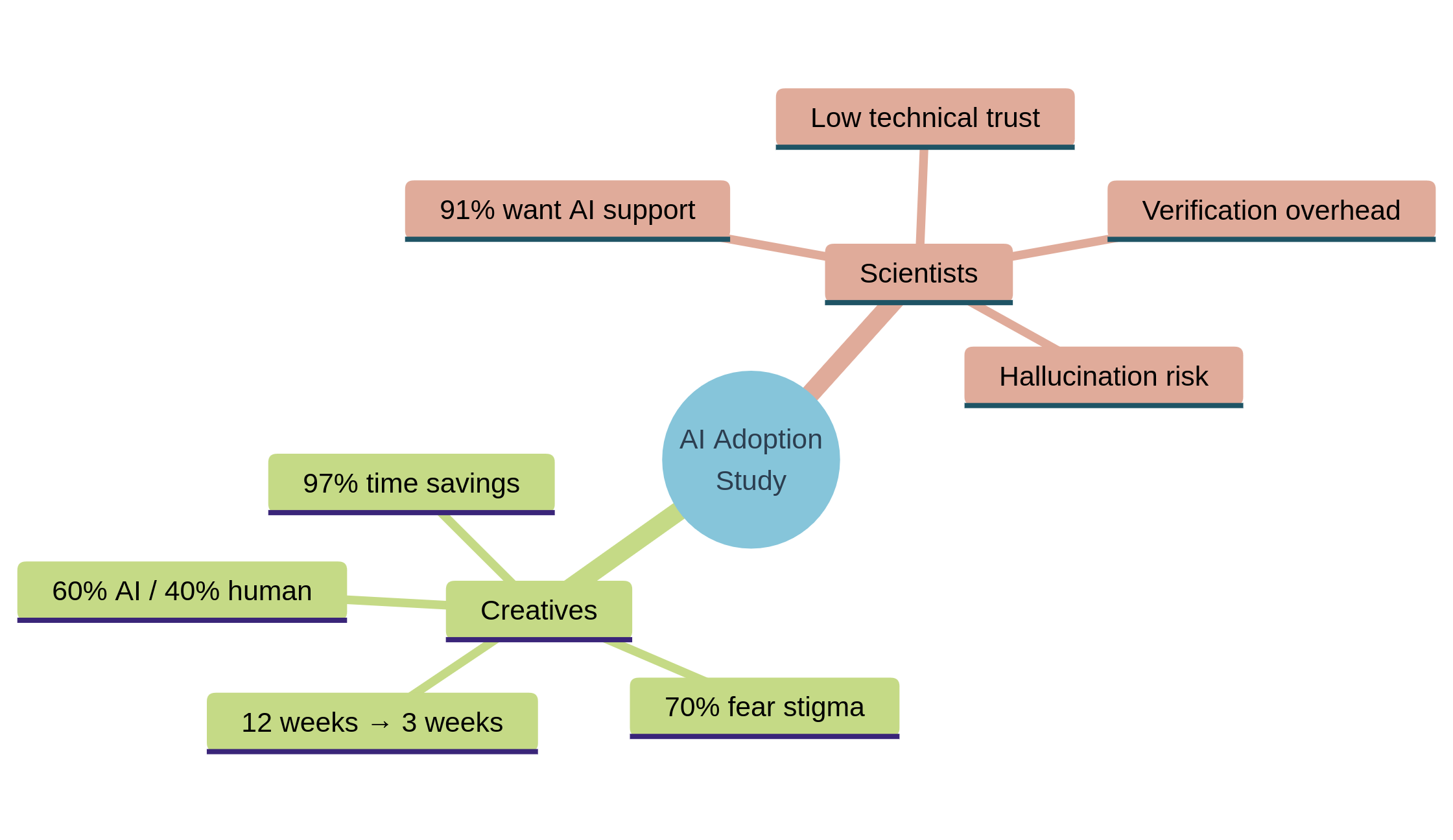

Anthropic recently deployed an AI research agent to interview 1,250 professionals about their relationship with generative tools.

The results expose a massive disconnect between private behavior and public image.

Among creative professionals, 97% reported that AI saves them time. Many said it transformed their output; one photographer cut a 12-week editing cycle to three weeks.

However, 70% of these same professionals fear social stigma if they admit to using these tools.

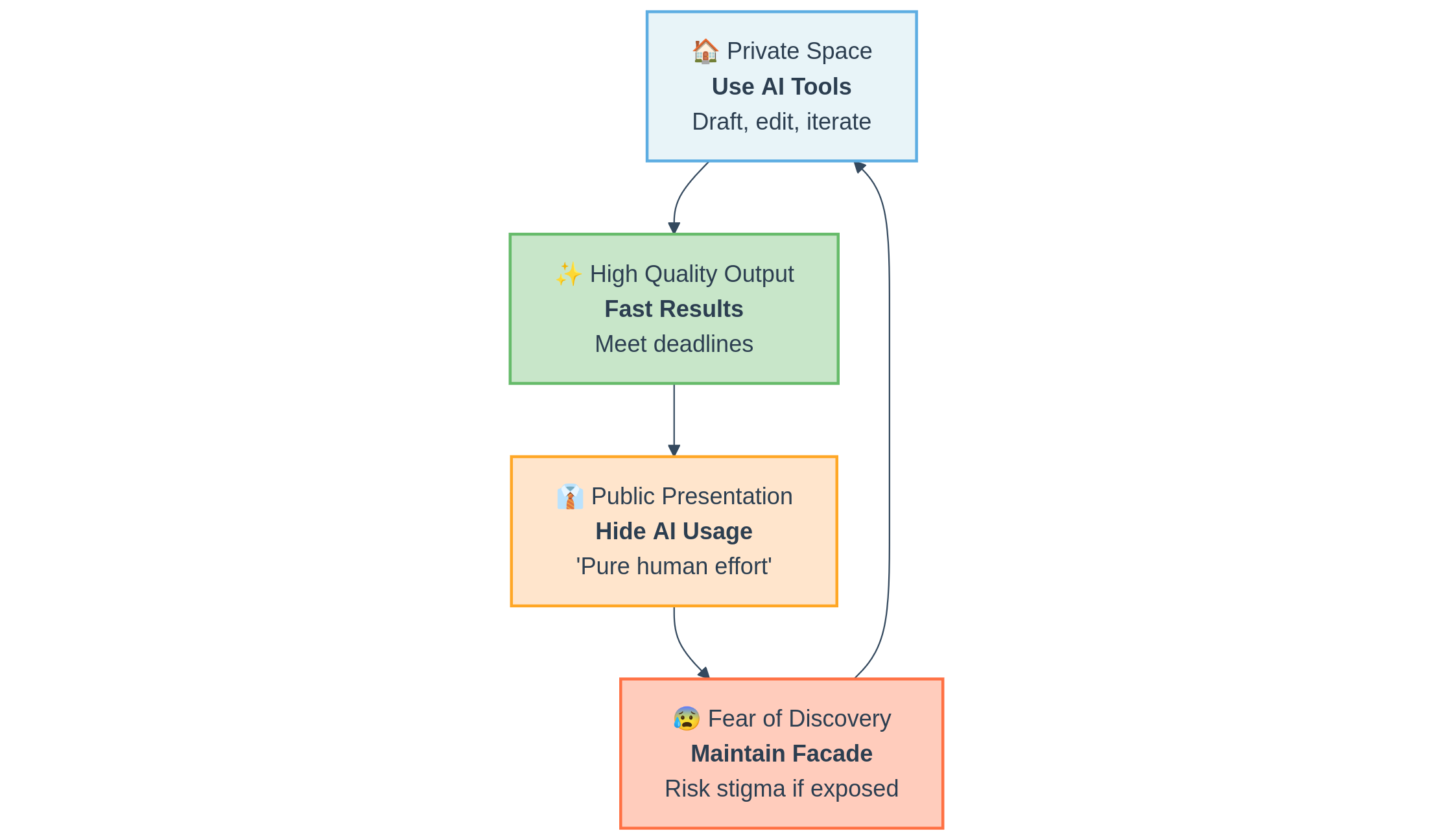

They are building what we might call a "Shadow Workflow." In the privacy of their home offices, they use AI to draft, edit, and iterate. But when they step into the collaborative light of the workplace, they stay silent, fearing judgment from colleagues or clients who treat "AI-assisted" as a synonym for "lazy" or "stolen."

The Trust Paradox

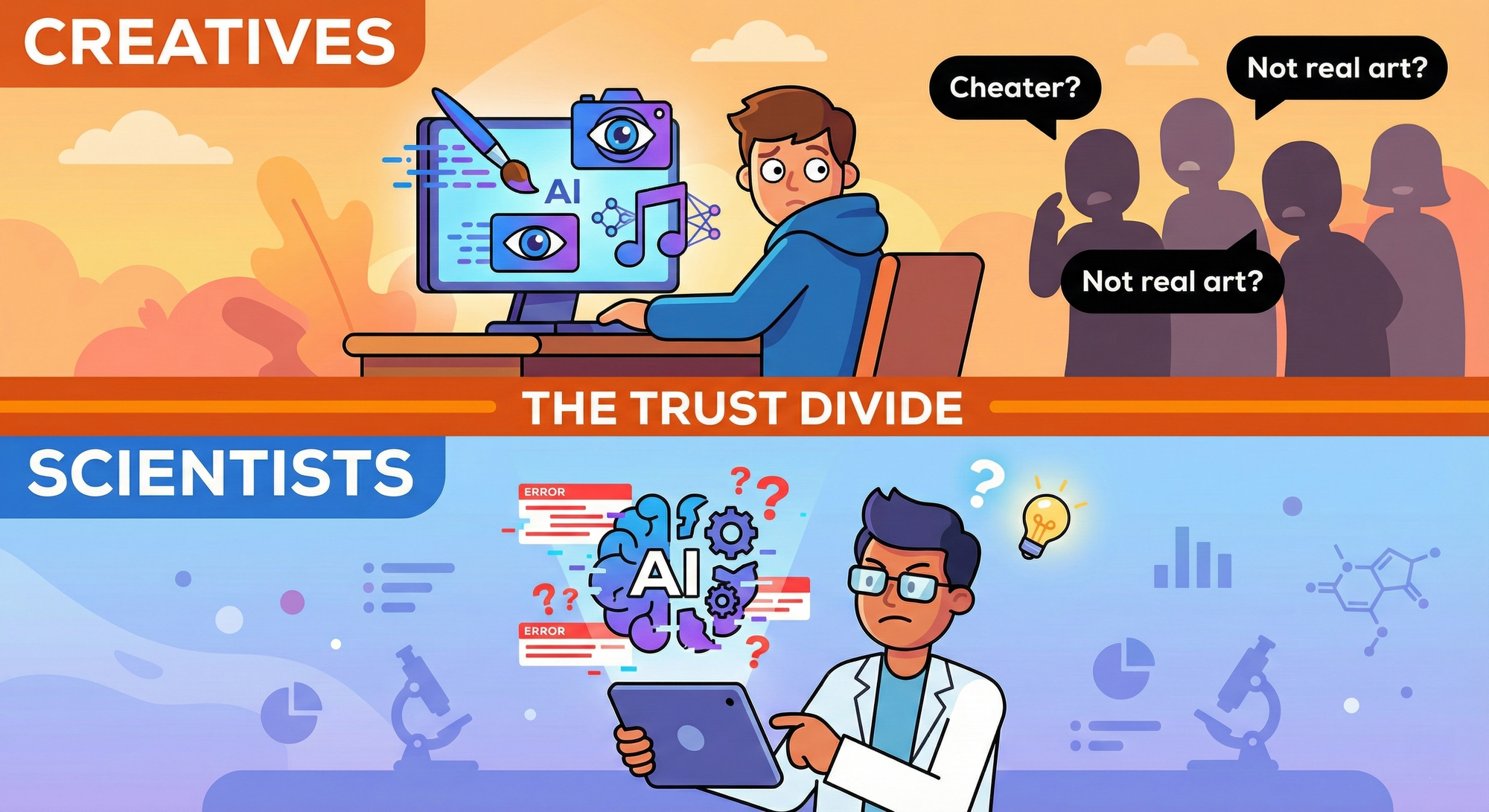

While creatives are hiding their usage, scientists are struggling to start.

The study highlights a sharp divergence in where trust breaks down for each field:

- Creatives struggle with social trust. The tools work well, but the culture rejects them.

- Scientists struggle with technical trust. The culture desires the help (91% want more AI support), but the tools aren't reliable enough yet.

For a mathematician or researcher, an AI that hallucinates facts is a liability. If it takes as long to verify the AI’s work as it does to do the work yourself, the value proposition collapses.

Creatives face the opposite problem: the tool is useful, but the optics are dangerous.

The Misunderstanding

The prevailing narrative suggests a binary war: Humans vs. Machines.

We see headlines about artists protesting scrapers and writers striking against automation.

The implication is that the creative class has universally rejected AI.

The reality is far more nuanced. The creative class is not rejecting AI; they are rejecting the reputation of AI.

They are adopting the technology at high rates to survive in a marketplace that demands speed, yet they must maintain the façade of artisanal purity to protect their brand. They are trapped in a performance of "pure human effort" while secretly relying on machine leverage to meet deadlines.

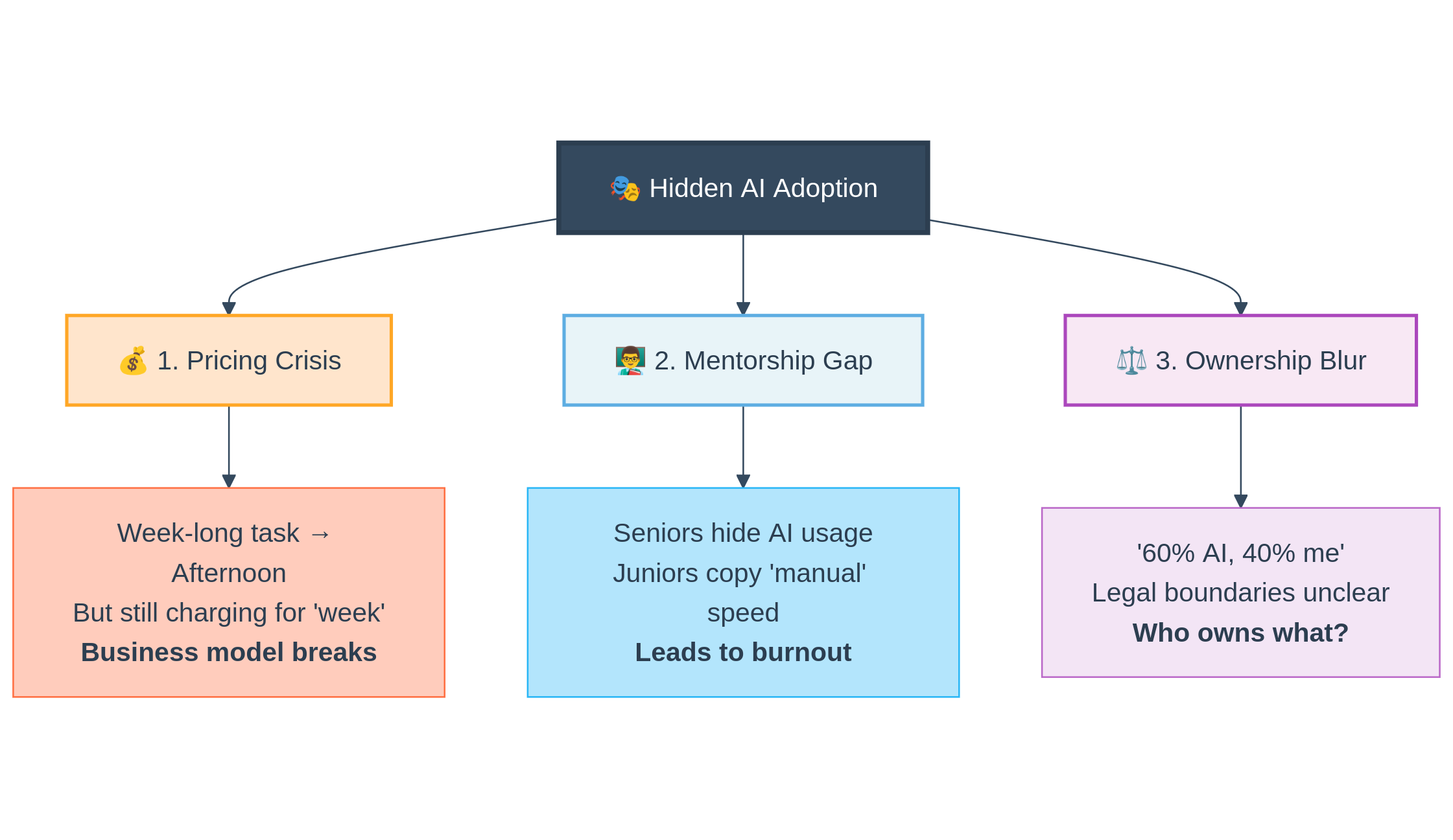

Second-Order Effects

If this "closeted" adoption continues, we risk stalling the maturation of the industry.

1. The Pricing Crisis

Creative work has historically been billed by time and effort (e.g., day rates). If a task that cost $2,000 and took a week now takes an afternoon, the business model breaks: billed by the hour, the fee shrinks with the timeline even though the client receives the same result. If creatives hide their tools, we cannot have an honest conversation about new pricing models based on value rather than hours.

2. The Mentorship Gap

Junior employees learn by watching seniors work. If senior creatives hide their use of AI, juniors will inherit a distorted view of the workflow. They will try to replicate "manual" speeds that no longer exist, leading to burnout.

3. The Dilution of Ownership

The study noted that boundaries are blurring. One artist described the creative split in their work as "60% AI, 40% my ideas." When usage is hidden, we avoid the difficult legal and ethical task of defining where the tool ends and the artist begins.

Signal Over Noise

We are moving toward a marketplace where the "how" matters less than the "what."

But until we stop treating efficiency as a scandal, we force our most productive workers to operate in the dark.

Is the value of creative work found in the struggle to produce it, or in the impact of the final result?